SKILLS

Figma Community Resource, AI Design Research, Concept Design, UX Design, Systems Thinking, Design Strategy

TEAM

4 UX Designers

1 PhD Researcher

1 Faculty Staff

TIMELINE

March 2025 - November 2025

9 Months

CONTEXT

The AI Concept Envisioning (ACE) Toolkit is a modular ideation and reflection system designed to support designers during early AI concept development. Built as a Figma Community resource, it integrates directly into designers’ existing workflows to help them articulate AI behavior, explore human–AI interaction patterns, and externalize design reasoning through shared visual artifacts.

The toolkit is used during early ideation and concept framing, before teams commit to specific AI directions. It supports both individual reflection and collaborative critique by providing structured prompts, reusable components, and visual scaffolding that make abstract AI decisions discussable and tangible.

ROLE

Graduate Design Assistant | UX Designer

I led the systems design for the toolkit, defined its structure and flow, and facilitated design sessions to understand where designers struggle during AI concept ideation. I created mid- to high-fidelity prototypes in Figma, built storyboard walkthroughs to visualize early workflows, and presented findings and iterations for faculty review. Over 9 months, I collaborated with 4 design researchers using a research-through-design approach to explore how reflective scaffolds, visual systems, and structured prompts could support more intentional AI concepting.

IMPACT

Published toolkit to the Figma Community, driving adoption directly within designers' workflows.

Strengthened early AI decision making by helping designers articulate AI behavior, values, and implications.

Supported individual reflection and collaborative critique through a modular, reusable system.

Co-authored an academic publication documenting the toolkit's framework for the broader design community.

PROBLEM

Designers want better ways to envision, reflect on, and critique their own AI concepts early in the process.

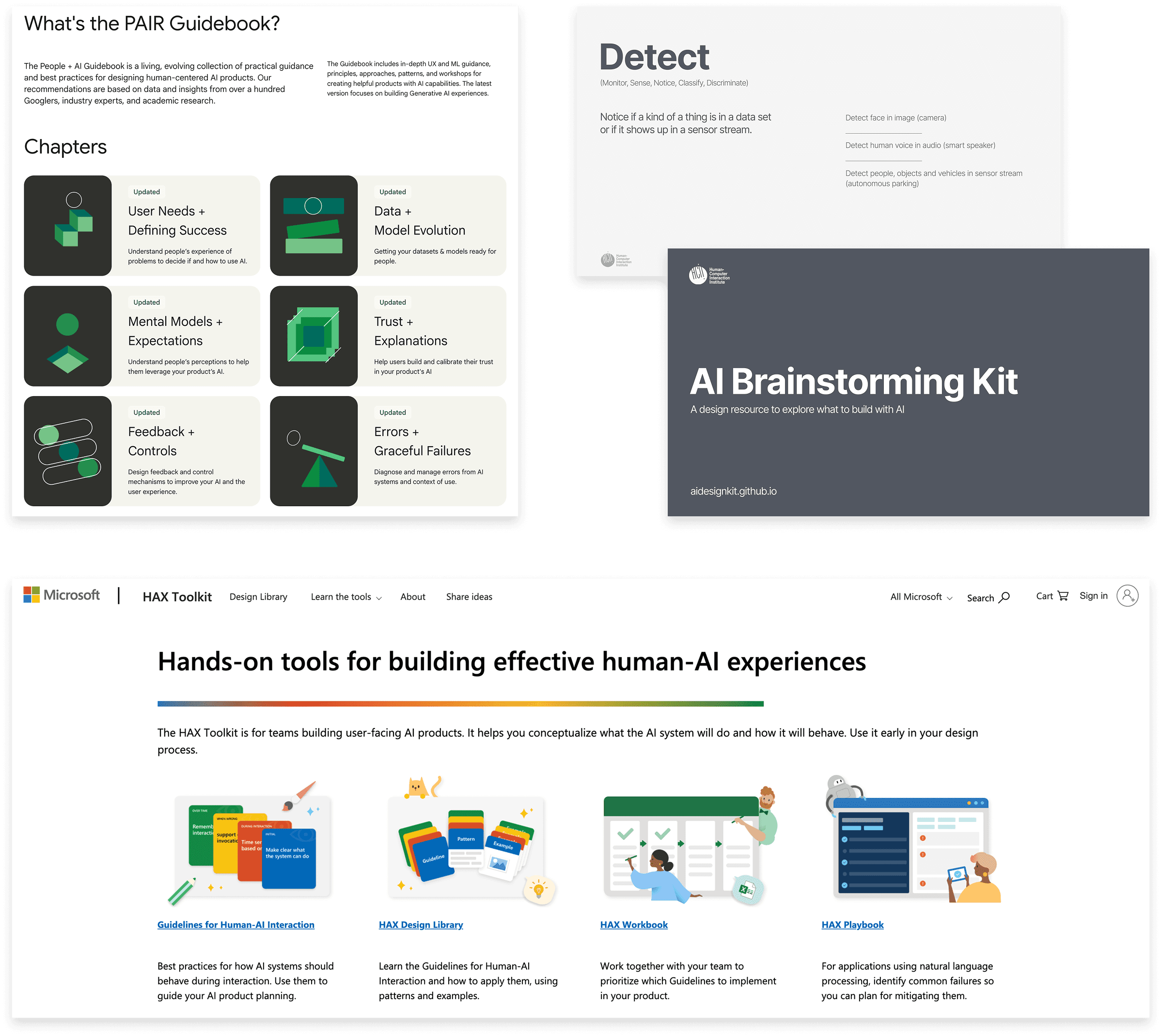

Designing for AI often feels ambiguous and inaccessible. Most designers lack frameworks for reasoning about AI behaviors, data-driven interactions, and value tensions that emerge when humans and AI collaborate. Existing resources, such as Google’s People + AI Guidebook or Microsoft’s HAX Toolkit, tend to support advanced teams and offer limited guidance at the ideation stage.

Google’s People + AI Guidebook, CMU HCII AI Brainstorming Kit, and Microsoft’s HAX Toolkit

WHY EXISTING TOOLKITS FELL SHORT

Existing AI design resources emphasize later-stage evaluation, providing limited support during early concept ideation when core decisions are formed.

Many tools assume prior AI literacy, limiting accessibility for designers who need scaffolding to reason about AI behavior and tradeoffs.

Most resources lack lightweight prompts that encourage reflection before teams commit to a specific AI direction.

EARLY EXPLORATION

To understand these gaps, we mapped designers’ ideation processes and storyboarded moments when they paused, questioned, or reframed their concepts.

These explorations revealed three key challenges in early AI ideation that shaped our first prototype.

Designers had difficulty translating loose concepts into concrete AI behaviors, found it hard to anticipate value tensions, and lacked lightweight cues that invited reflection before committing to a direction.

OPPORTUNITY

Designers did not struggle to generate AI ideas. They struggled to understand what “good” AI behavior looks like and how to reflect on the implications of their choices.

This revealed an opportunity to create a toolkit that helps designers externalize their thinking, ground concepts in real AI capabilities, and reflect on how those decisions align with human values.

I explored multiple ways this toolkit could integrate directly into designers’ existing workflows.

VERSION 1

The Web-based Toolkit

Guided by our mapping and storyboards, our first prototype included an AI capability library, use cases, value reflection cards with sliders, and an evaluation matrix.

AI Capability Library

A selection list of the core capabilities of AI, adapted from CMU’s AI Brainstorming Kit.

Reflection Cards with Sliders

Prompts to surface human-AI value tensions.

Use Cases

Examples of use cases followed by questions to consider in order to encourage anticipatory thinking.

Evaluation Matrix

A structured way to assess feasibility and alignment with human values.

WHY IT DIDN'T WORK

Pilot Testing with 30 Design Professionals Surfaced Adoption Barriers.

Not integrated into designers' workflows.

Designers ideate in Figma, FigJam, and Miro. Leaving those spaces created friction and reduced the likelihood of adoption.

Sliders created false precision and undermined reflection.

The numeric scales shifted focus to debating numbers instead of discussing value tensions.

Digital format limited collaborative critique.

Educators and design teams needed printable and tangible materials for group critique so they could rearrange, sort, and cluster concepts.

VERSION 2

Figma Community Toolkit

We redesigned the toolkit as a Figma Community resource, placing it directly where designers ideate. Components became modular, tactile, and remixable to support reflection, collaboration, and critique within real workflows.

AI Capability Library

Refined the layout of each definition and its use cases, and added user guides explaining why each capability matters for concept reasoning.

Value Reflection Cards

Kept the prompts, removed the sliders, and made the cards fully movable. We also enhanced our primary color palette for improved accessibility and contrast.

Reflection Matrix Template

A quadrant canvas for mapping tensions, clustering insights, and documenting reasoning visually.

User Guides

Short guides were added to show how to browse capabilities, transition into values, and use the toolkit individually or as a team.

THE TOOLKIT

The redesigned toolkit supports designers where reflection naturally happens during the ideation phase. It helps them articulate AI behavior early, identify value tensions before concepts solidify, and communicate decisions through shared artifacts. What started as a standalone website evolved into a collaborative resource shaped by the realities of design practice.

REFLECTION

This project reinforced that designing for AI also means designing for uncertainty. Our assumptions about format and structure were challenged through pilot testing, and the strongest decisions came from acknowledging what was not working.

I learned how much reflective scaffolding designers need when reasoning about AI behavior. Moments of hesitation, confusion, and debate directly shaped the prompts, modularity, and structure of the final system. The toolkit only became useful once it mirrored real workflow habits, supported collaboration, and lowered the cognitive barrier to evaluating value tensions and implications.