SKILLS

Usability Testing, Interaction Design, Trust & Safety, Design Strategy

TEAM

4 Product Designers

1 Senior Researcher

TIMELINE

January 2025 - March 2025

3 Months

Built with Origami Studio and Lottielab.

PROBLEM & CONTEXT

Hiya launched an AI-powered calling app with features like spam protection, AI call summaries, automatic calendar event creation, and Incognito mode. Despite strong interest, adoption of core AI features lagged. Users hesitated because they didn’t always understand when AI was active or how decisions were made, which reduced trust in key features.

The design challenge was to make AI features discoverable, predictable, and trustworthy, while maintaining usability and privacy standards.

ROLE

Product Designer (Usability Research Lead)

I led usability research, synthesized insights into 4 actionable design recommendations, and presented them to the UX team, which influenced the Hiya AI Phone product roadmap. I collaborated cross-functionally with product designers, researchers, and engineers throughout the process.

IMPACT

Delivered 4 actionable design recommendations that directly informed product roadmap decisions for AI-powered calling features.

Reduced user hesitation in privacy-sensitive flows through clear indicators of AI activity and privacy state.

Increased users' confidence in acting on scam warnings, privacy controls, and AI-generated events by clarifying trust signals and system behavior.

Revealed cross-platform inconsistencies and reliability gaps between the Android and iOS experiences, informing fixes and onboarding improvements.

SOLUTION

These design recommendations addressed the key barriers in discoverability, clarity, and confidence by making Hiya AI's features easier to understand, predict, and trust.

TARGET USERS

Busy business professionals: small business owners, real estate agents, and service workers

They rely on their phones throughout the day and value productivity, control, and privacy. They are open to AI features, but only when the system feels predictable and trustworthy.

CONSTRAINTS

Design decisions had to work within live-product limitations: cross-platform inconsistencies between Android and iOS, privacy-sensitive requirements, and an already-launched product.

Due to time constraints, usability testing was limited to moderated sessions, so longitudinal insights were not collected.

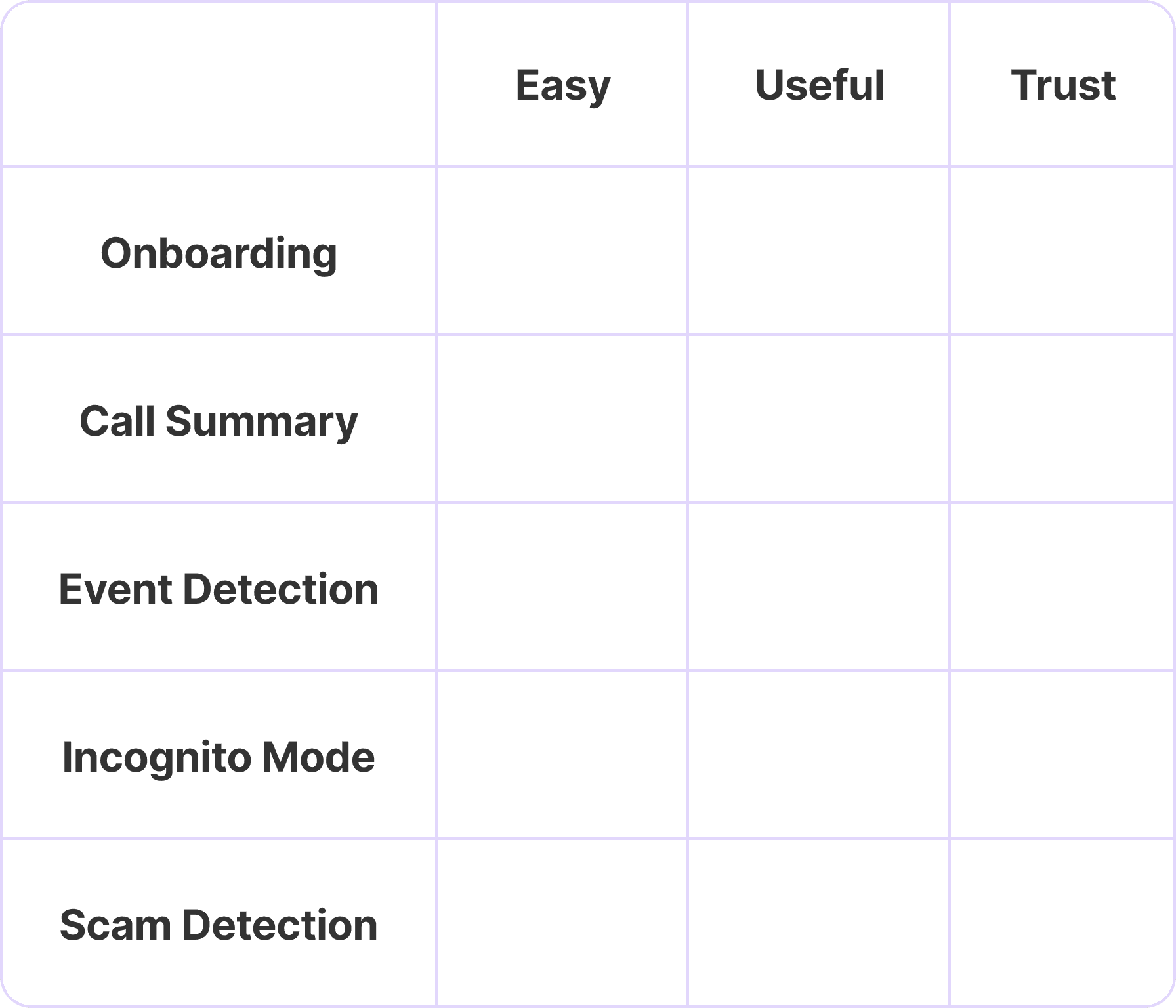

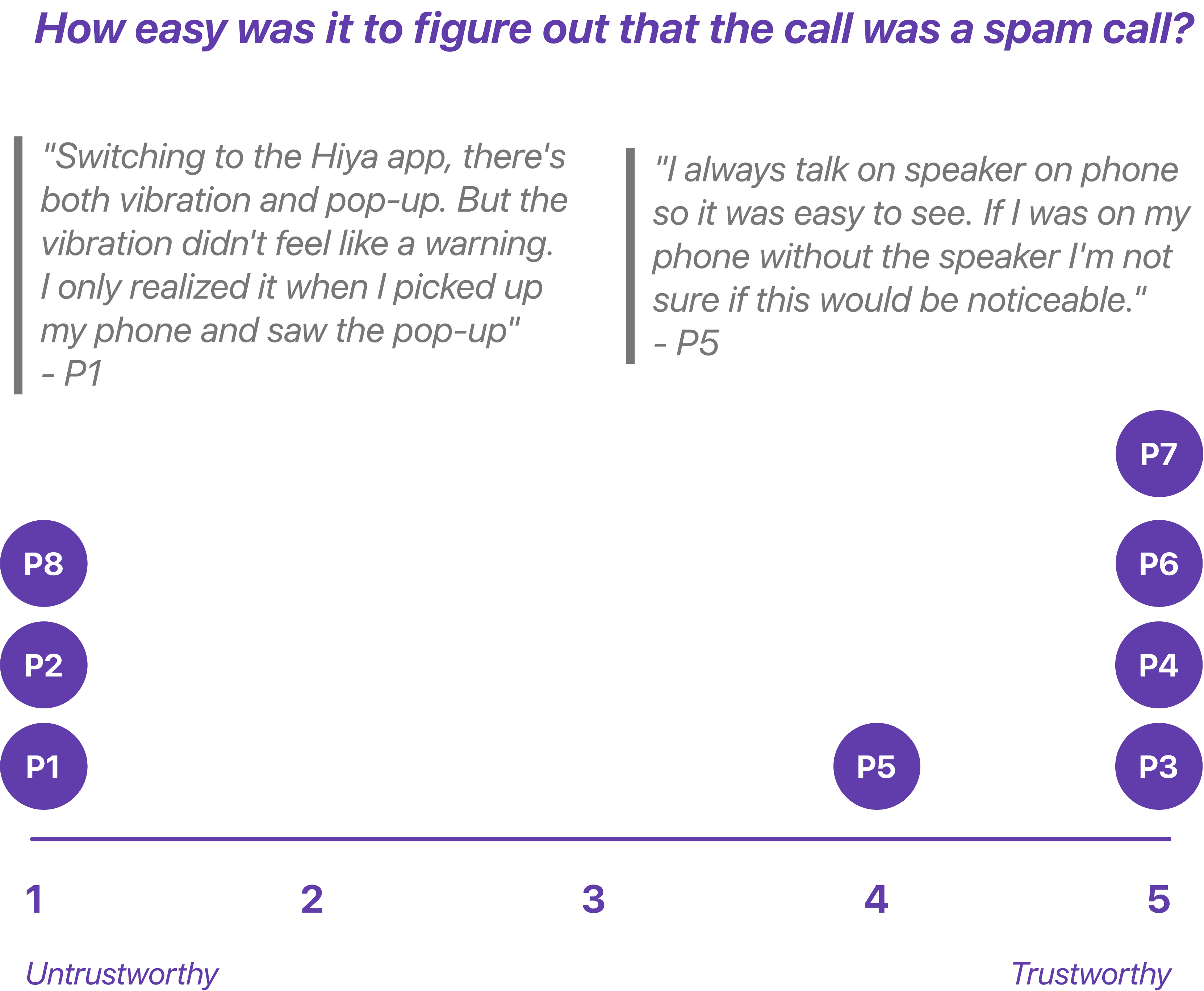

Likert Scale Responses

SUCCESS CRITERIA

Our design goals were to reduce user hesitation, improve clarity of AI activity, strengthen trust in privacy-sensitive features, and increase task success for key interactions like spam alerts, event creation, and Incognito mode.

RESEARCH APPROACH

We began with 8 exploratory questions covering onboarding, feature discovery, AI interactions, and calling workflows. After alignment with the UX team, we narrowed the scope to 4 questions most critical to adoption and trust.

Can users discover and understand Hiya’s core AI features, including scam detection, event creation, and Incognito mode?

Do users know when AI is active, and do they feel confident relying on it?

Can users complete in-call tasks (answering, managing, and summarizing calls) without hesitation or confusion?

Do users trust Hiya to protect their privacy and flag scam calls appropriately?

TRANSLATING OUR FINDINGS

We screened over 30 participants and conducted 10+ moderated usability sessions. Findings were synthesized using affinity mapping and severity scoring.

Affinity Mapping User Feedback

INSIGHT 1

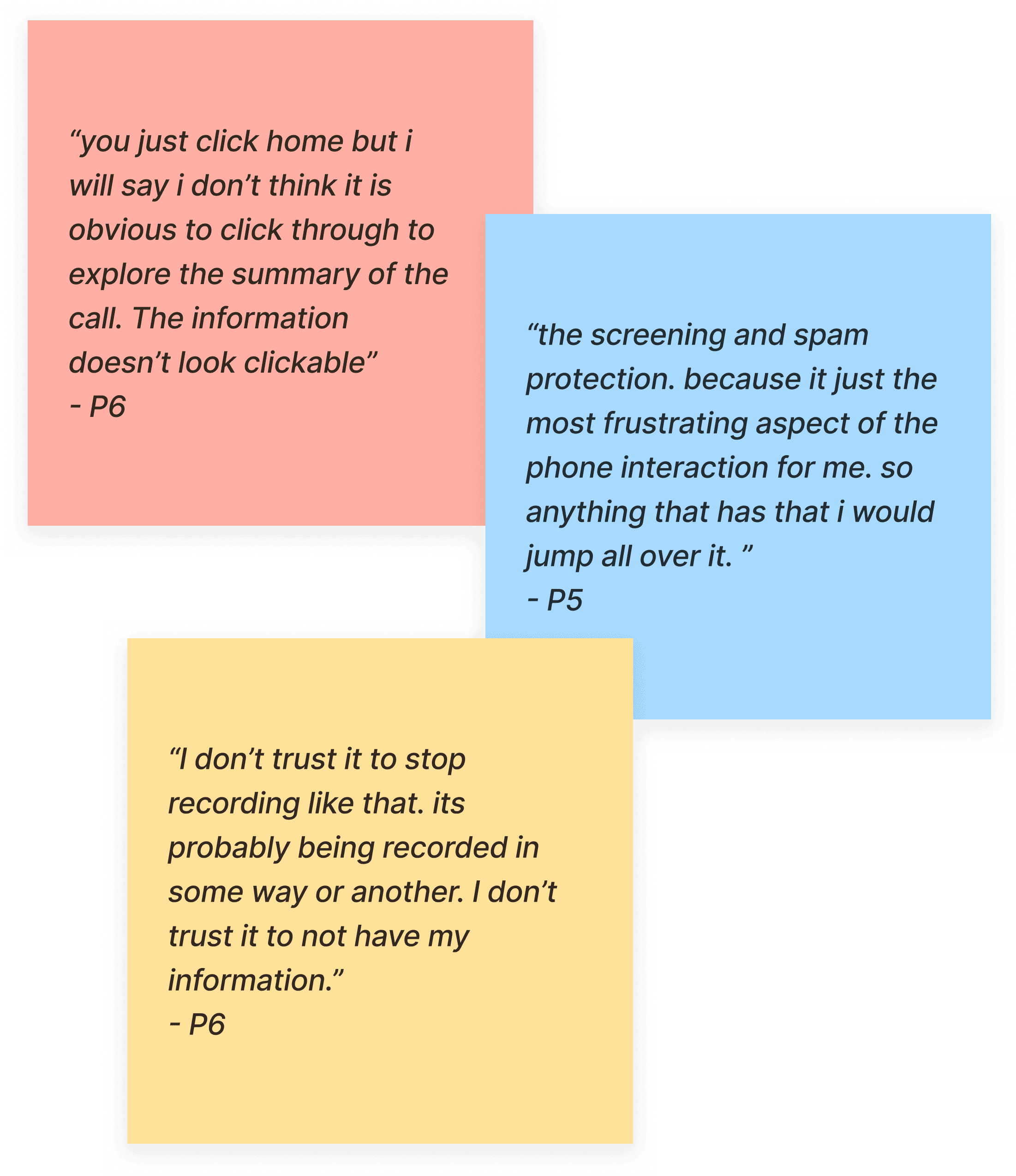

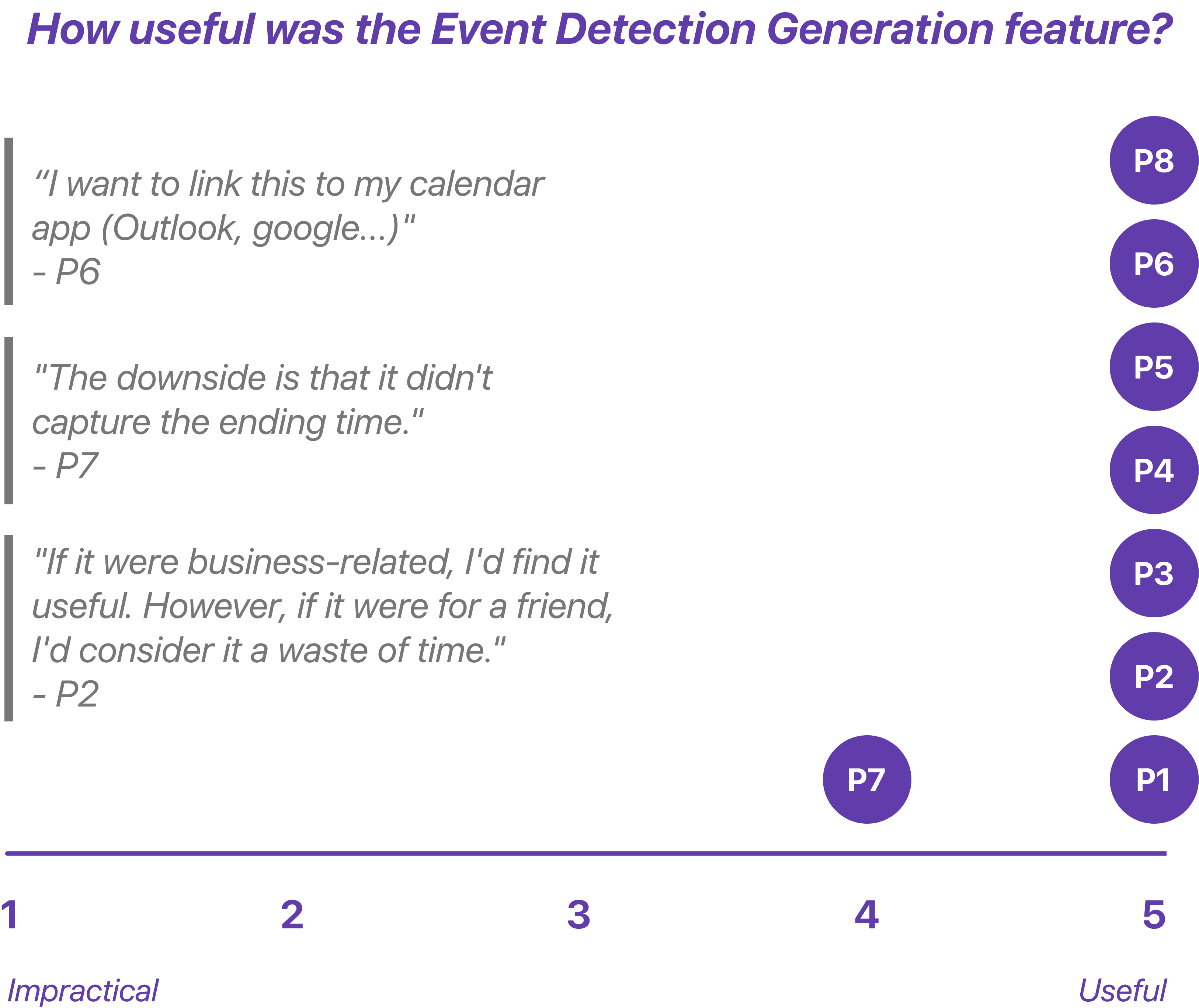

Calendar event detection was highly valued, but rigid controls and limited flexibility prevented users from relying on it in real workflows.

Rated 4.9 out of 5 for usefulness, but professionals couldn’t change end times, add guests, or sync events to Google or Outlook, which limited adoption of the feature.

BEFORE

Confusing and rigid event creation reduced clarity and control.

The calendar icon looked tappable, misleading people into unplanned detours.

Events showed only start times, leaving meetings open-ended and uneditable.

All events defaulted to the system calendar, frustrating users loyal to Google or Outlook.

AFTER

Users regained control and confidence through flexible event creation.

Added color-coded event states [Unconfirmed] and [Confirmed] for instant clarity.

Added a review-and-edit step so users can adjust titles, times, and details before saving.

Introduced third-party calendar integration so users could save events in their preferred calendar.

INSIGHT 2

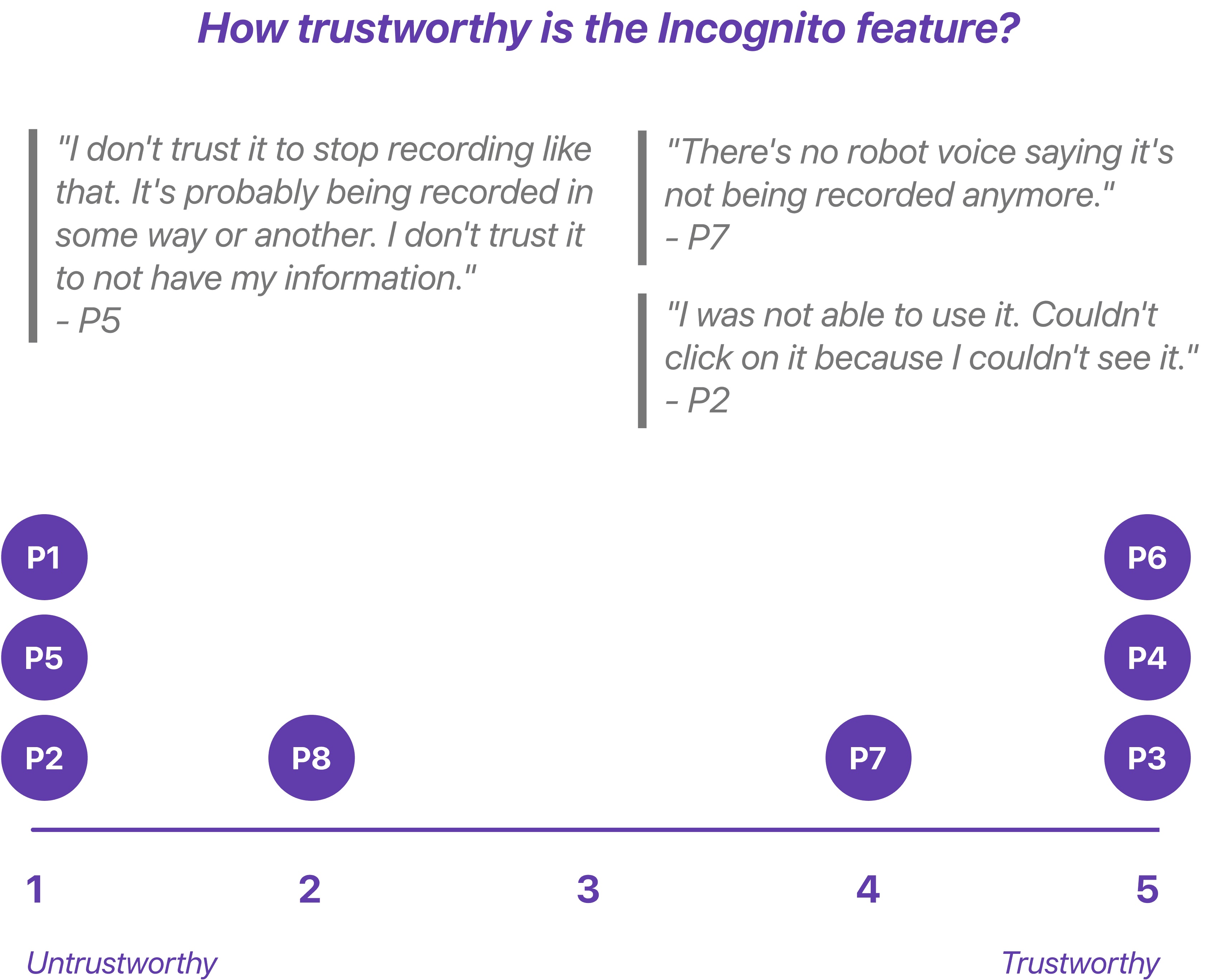

Incognito mode introduced more uncertainty than confidence due to missing and inconsistent trust signals.

6 of 8 participants found the feature, but only 4 trusted it. Missing activation cues, inconsistent behavior across speaker and handset modes, and no confirmation after calls ended left users unsure whether privacy protections were actually in place.

BEFORE

Inconsistent behavior led to missing signals and distrust.

Discovery varied by speaker or handset mode, making activation unreliable.

No confirmation indicated whether recording had stopped, leaving users unsure the feature even worked.

AFTER

Clear confirmations turned uncertainty into trust.

An onboarding intro explains how Incognito works and what protections it provides.

When active, users hear a verbal confirmation and see a visual pop-up confirming privacy is enabled.

INSIGHT 3

Spam alerts failed to earn trust because critical warnings were easy to miss and lacked an explanation.

Visual and haptic cues went unnoticed by users, and the system did not clearly communicate why the call was flagged, leaving users uncertain whether they should act on the warning.

BEFORE

Weak visibility made critical warnings easy to miss.

The “Potential Scam Call” label went unnoticed beneath the phone number.

Users were forced to choose [Accept] or [Decline] with no context.

Subtle haptics failed to capture attention, and the AI gave no explanation for flagging a call as spam, which reduced trust.

AFTER

Alerts became visible and impossible to miss.

A pre-call banner with strong haptics and a distinct tone commands attention before connection.

A bold warning screen states the risk and gives users time to decide safely.

An AI explainer surfaces the reasoning behind alerts: “We flagged this number based on reported spam patterns.”

REFLECTION

Hiya’s app was newly launched, and several technical issues surfaced during usability testing, including onboarding failures that blocked user progress. I partnered closely with engineers to surface these issues early, ensuring fixes could ship without delaying research. Our initial test plan was too long for a single session, so I worked with researchers and designers to condense the flow while preserving insights across clarity, confidence, and task success.

Hiya is currently available only in the U.S. Looking ahead, international expansion will require adapting scam detection logic and trust cues to local norms. Spam patterns, regulatory expectations, and interpretations of trust signals vary widely, and thoughtful localization will be essential to maintain trust and usability across markets.